How Meta Scales XFaaS to Millions of Serverless Calls per Second

Meta executes millions of serverless function calls per second, which adds up to trillions of invocations per day, making it one of the most instructive examples of hyperscale distributed system design. XFaaS, Meta’s internal Function-as-a-Service platform, powers background workloads across Facebook, Instagram, and WhatsApp with an architecture optimized for throughput, elasticity, cost efficiency, and global resiliency.

For CTOs, staff engineers, and distributed systems architects, analyzing XFaaS reveals critical lessons in queuing systems, global scheduling, back-pressure, and ultra-high-density compute utilization.

1. Why XFaaS Exists — And Why Meta Needed Its Own Serverless Platform

XFaaS isn’t AWS Lambda or Google Cloud Functions. It’s an internal platform designed for background tasks, not interactive traffic. Meta needed a system capable of processing massive asynchronous workloads without spinning up thousands of long-running services.

XFaaS is optimized for:

- Throughput over latency

- Delay-tolerant workloads

- Hardware utilization at extreme scale

- Global workload rebalancing

- Cost efficiency

User-facing systems still rely on traditional services—but XFaaS handles the firehose of async tasks that keep Meta’s ecosystem running.

2. The Core of XFaaS: A Multi-Stage Invocation Pipeline

To sustain millions of function calls per second, XFaaS uses a layered architecture in which each component has a single, well-defined responsibility, so load can be absorbed, ordered, and dispatched in separate stages.

How an Invocation Flows Through XFaaS

1. Submitters → QueueLB → DurableQ

Submitters send requests to the Queue Load Balancer, which spreads invocations across sharded DurableQ instances. These queues:

- absorb burst traffic

- guarantee durability

- smooth traffic for downstream schedulers
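
The submission path above can be sketched in a few lines. This is a minimal illustration, not Meta’s implementation: the `ShardedQueues` class, the `Invocation` type, the function name `resize_image`, and the choice of hashing the call ID to pick a shard are all assumptions made for the example, and in-memory deques stand in for durable storage.

```python
import hashlib
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Invocation:
    call_id: str
    function: str
    payload: dict = field(default_factory=dict)


class ShardedQueues:
    """Hypothetical stand-in for QueueLB plus a set of DurableQ shards."""

    def __init__(self, num_shards: int) -> None:
        # In-memory deques stand in for durable, replicated queues.
        self.shards = [deque() for _ in range(num_shards)]

    def shard_for(self, call_id: str) -> int:
        # Stable hash, so the same call ID always maps to the same shard.
        digest = hashlib.sha256(call_id.encode()).digest()
        return int.from_bytes(digest[:8], "big") % len(self.shards)

    def submit(self, inv: Invocation) -> int:
        idx = self.shard_for(inv.call_id)
        self.shards[idx].append(inv)
        return idx


queues = ShardedQueues(num_shards=4)
for i in range(1000):
    queues.submit(Invocation(call_id=f"call-{i}", function="resize_image"))
depths = [len(shard) for shard in queues.shards]  # roughly even spread
```

Hashing a unique call ID spreads a burst across all shards, so no single queue has to absorb it alone.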

2. Scheduler → FuncBuffer → RunQ

Schedulers pull invocations from DurableQ into FuncBuffers (in-memory, per-function buffers) and apply:

- deadlines

- fairness policies

- tenant quotas

- back-pressure rules

- priority scoring

They then publish ready work to RunQ, an in-memory queue of invocations that are cleared for execution.
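
To make the scheduling step concrete, here is a small sketch that orders pending calls by priority and deadline and enforces per-tenant quotas. The `PendingCall` fields, the `schedule` function, the tenant names, and the rule "lower criticality number means more urgent" are assumptions for illustration; the real scheduler’s policies are more involved.

```python
import heapq
from collections import Counter
from dataclasses import dataclass, field


@dataclass(order=True)
class PendingCall:
    # Sort key: lower criticality number first, then earliest deadline.
    criticality: int
    deadline: float
    call_id: str = field(compare=False)
    tenant: str = field(compare=False)


def schedule(pending, tenant_quota, run_slots):
    """Pick up to run_slots calls, most urgent first, honoring tenant quotas."""
    heap = list(pending)
    heapq.heapify(heap)
    used = Counter()
    run_queue, deferred = [], []
    while heap:
        call = heapq.heappop(heap)
        quota = tenant_quota.get(call.tenant, 0)
        if len(run_queue) < run_slots and used[call.tenant] < quota:
            used[call.tenant] += 1
            run_queue.append(call)
        else:
            deferred.append(call)  # stays buffered for a later round
    return run_queue, deferred


pending = [
    PendingCall(1, 50.0, "a", "feed"),
    PendingCall(0, 90.0, "b", "ads"),
    PendingCall(1, 10.0, "c", "feed"),
    PendingCall(1, 20.0, "d", "feed"),
]
run_queue, deferred = schedule(pending, {"feed": 2, "ads": 1}, run_slots=3)
```

Deferred calls are not dropped. They remain buffered and compete again in the next scheduling round.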

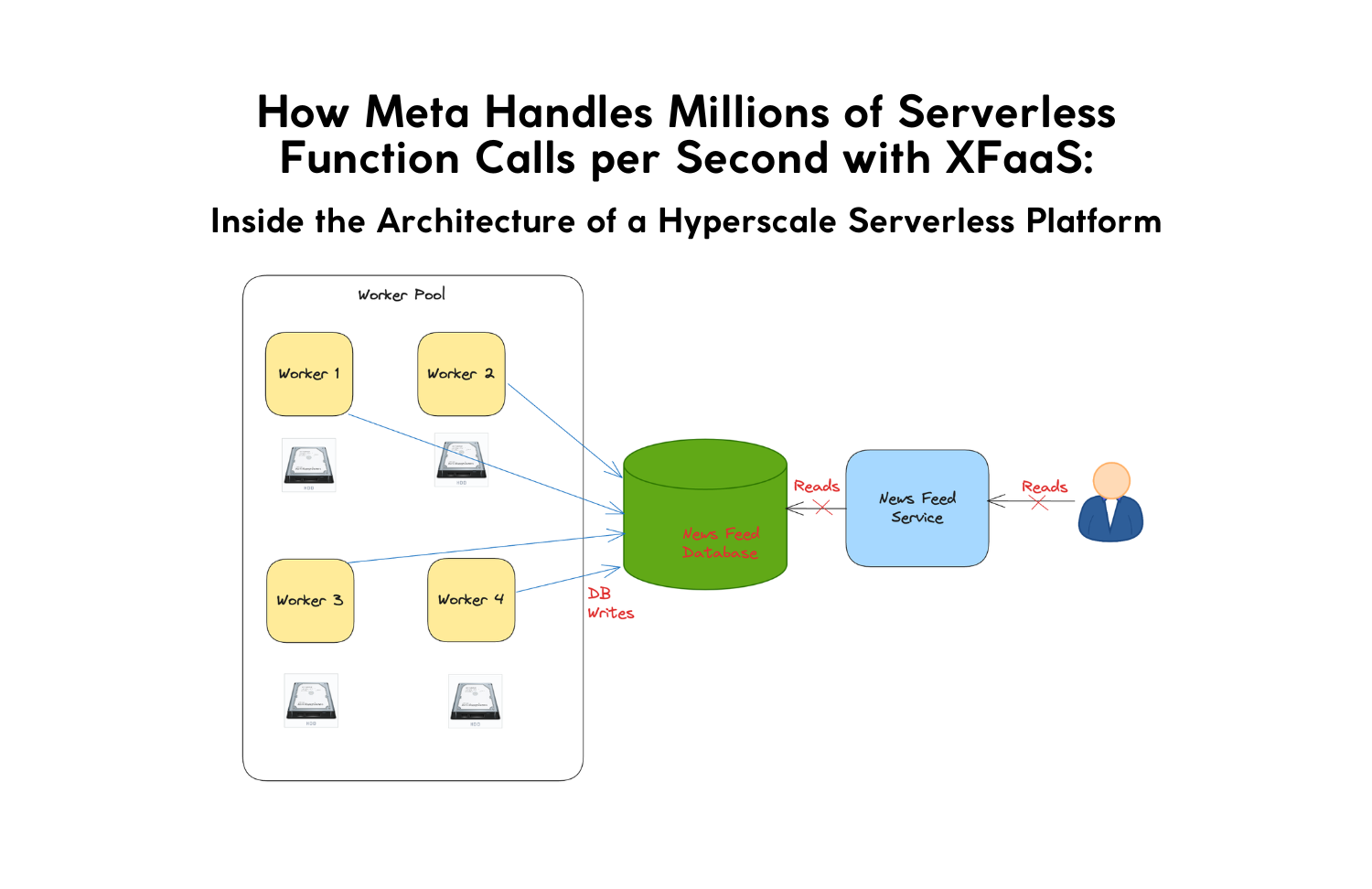

3. WorkerLB → Worker Pools

WorkerLB distributes tasks across tens of thousands of warm workers that are preloaded with runtimes and function code to minimize cold starts.

Meta’s internal trust model enables:

- multi-tenant worker processes

- shared memory pools

- batched execution

Together, these yield very high compute density.
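
One way to picture warm-worker dispatch is a load balancer that prefers workers that already have the function in memory. The `Worker` type, the `pick_worker` function, and the function names below are hypothetical, and the policy shown (warm first, then least loaded) is an assumed simplification rather than the documented WorkerLB algorithm.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    name: str
    loaded: set = field(default_factory=set)  # functions already warm in memory
    in_flight: int = 0


def pick_worker(workers, function):
    """Prefer a worker with the function already loaded; break ties by load."""
    # Sort key: (is_cold, in_flight). False sorts before True, so warm wins.
    best = min(workers, key=lambda w: (function not in w.loaded, w.in_flight))
    best.loaded.add(function)  # a cold worker loads the function once
    best.in_flight += 1
    return best


workers = [
    Worker("w1", {"thumbnail"}, in_flight=3),
    Worker("w2", set(), in_flight=0),
]
first = pick_worker(workers, "thumbnail")   # w1: warm, despite higher load
second = pick_worker(workers, "transcode")  # w2: both cold, w2 is less loaded
third = pick_worker(workers, "transcode")   # w2: now warm for this function
```

A production balancer would also cap per-worker load so that a popular function does not pile onto one warm worker; that cap is omitted here for brevity.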

3. Why XFaaS Scales: Meta’s Key Hyperscale Optimizations

Meta can’t simply “add more servers.” At XFaaS scale, architecture matters more than raw hardware.

Here are the design principles enabling trillions of daily invocations:

1. Delay-Tolerant Execution & Time-Shifting

Not all workloads require immediate execution. XFaaS classifies jobs by tolerance levels, enabling controlled delays for tasks like:

- ML preprocessing

- analytics pipelines

- ranking-signal processing

- notifications

- offline enrichment

This flattens spikes and maximizes hardware utilization.
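
A time-shifting decision can be reduced to a simple rule: run now if capacity is free or the deadline is close, otherwise wait. The sketch below assumes a hypothetical `Job` type, a utilization threshold of 0.8, and a 60-second safety margin; none of these values come from Meta.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    deadline_s: float  # latest acceptable start time, in seconds from now


def should_run_now(job, utilization, busy_threshold=0.8, safety_margin_s=60.0):
    """Run immediately if the deadline is near or the fleet has spare capacity."""
    slack = job.deadline_s - safety_margin_s
    if slack <= 0:
        return True  # no room left to delay
    return utilization < busy_threshold


urgent = Job("push_notification", deadline_s=30.0)
tolerant = Job("analytics_rollup", deadline_s=6 * 3600.0)

peak_urgent = should_run_now(urgent, utilization=0.95)        # True
peak_tolerant = should_run_now(tolerant, utilization=0.95)    # False: deferred
offpeak_tolerant = should_run_now(tolerant, utilization=0.4)  # True
```

Deferring only the jobs with slack is what lets peak capacity be sized closer to average demand.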

2. Global Load Balancing Through GTC

The Global Traffic Conductor (GTC) continuously monitors regional load and rebalances work worldwide.

This enables:

- region-level failover

- global pooling of compute

- lower overprovisioning

- improved resiliency
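
The rebalancing idea can be sketched as a greedy plan that moves excess demand from overloaded regions to regions with spare capacity. The `plan_transfers` function, the region names, and the numbers are illustrative assumptions; the actual GTC logic is not described in detail here.

```python
def plan_transfers(demand, capacity):
    """Greedy plan: shift excess demand to regions with spare capacity."""
    surplus = {region: capacity[region] - demand[region] for region in demand}
    # Most overloaded regions first, then regions with the most headroom first.
    overloaded = sorted((r for r in surplus if surplus[r] < 0), key=lambda r: surplus[r])
    spare = sorted((r for r in surplus if surplus[r] > 0), key=lambda r: -surplus[r])
    transfers = []
    for src in overloaded:
        excess = -surplus[src]
        for dst in spare:
            if excess == 0:
                break
            moved = min(excess, surplus[dst])
            if moved > 0:
                transfers.append((src, dst, moved))
                surplus[dst] -= moved
                excess -= moved
    return transfers


demand = {"us-east": 120, "eu-west": 60, "ap-south": 40}
capacity = {"us-east": 100, "eu-west": 100, "ap-south": 50}
transfers = plan_transfers(demand, capacity)
# [("us-east", "eu-west", 20)]
```

A real conductor would also weigh network cost and data locality before moving work across regions.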

3. Intelligent Back-Pressure

XFaaS protects downstream systems using:

- adaptive throttling

- function-level pacing

- tenant quotas

- latency-based feedback loops

When a downstream dependency slows down, XFaaS automatically reduces throughput to avoid cascading failures.
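
A latency-based feedback loop is often built as additive-increase, multiplicative-decrease (AIMD), the same shape TCP uses for congestion control. The sketch below is one common way to implement adaptive throttling, not a description of XFaaS internals; the `AdaptiveLimiter` class and all of its thresholds are assumptions.

```python
class AdaptiveLimiter:
    """AIMD concurrency limit driven by observed downstream latency."""

    def __init__(self, limit=100.0, min_limit=1.0, max_limit=1000.0,
                 target_latency_ms=200.0):
        self.limit = limit
        self.min_limit = min_limit
        self.max_limit = max_limit
        self.target_latency_ms = target_latency_ms

    def observe(self, latency_ms, error=False):
        if error or latency_ms > self.target_latency_ms:
            # Downstream is struggling: back off quickly.
            self.limit = max(self.min_limit, self.limit * 0.5)
        else:
            # Downstream is healthy: probe for more capacity slowly.
            self.limit = min(self.max_limit, self.limit + 1.0)
        return self.limit


limiter = AdaptiveLimiter(limit=100.0)
after_fast = limiter.observe(latency_ms=50.0)               # 101.0
after_slow = limiter.observe(latency_ms=500.0)              # 50.5
after_error = limiter.observe(latency_ms=50.0, error=True)  # 25.25
```

Backing off fast and recovering slowly keeps a struggling dependency from being hit again before it has stabilized.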

4. High-Density Worker Execution

Because it’s internal, Meta can optimize workers beyond what public clouds allow:

- shared runtimes

- co-located functions

- cooperative JIT compilation

- warm code caches

- batched executions

This drastically reduces cost per invocation.

4. Strengths and Trade-Offs: XFaaS Isn’t Built for Everything

Strengths

- Handles trillions of invocations/day

- Global workload balancing

- Extremely high hardware utilization

- Massive cost savings over traditional services

- Graceful degradation under pressure

Trade-Offs

- Not optimized for sub-100 ms latency

- Operationally complex

- Works only due to Meta’s internal trust and uniform hardware

- Requires sophisticated global orchestration

XFaaS is intentionally tuned for throughput, not interactivity.

5. What Engineering Teams Can Learn from XFaaS

Even companies with far smaller workloads can apply these principles:

1. Separate async from synchronous workloads

Don’t run asynchronous tasks inside latency-sensitive services.

2. Implement back-pressure everywhere

Downstream-aware throttling prevents system-wide failures.

3. Use multi-zone or multi-region distribution

Even at small scale, it improves resilience.

4. Split durability and execution layers

Pairing durable queues with in-memory scheduling keeps accepted work safe across failures while keeping dispatch fast.
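
At small scale, the split between a durable layer and an in-memory execution layer can be as simple as an append-only log plus a queue rebuilt from it on restart. The `DurableLog` class, the file name, and the task fields below are hypothetical, and the sketch is single-process and omits acknowledging completed tasks.

```python
import json
import os
import tempfile
from collections import deque


class DurableLog:
    """Append-only file standing in for a durable queue."""

    def __init__(self, path):
        self.path = path

    def append(self, task):
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(task) + "\n")
            f.flush()
            os.fsync(f.fileno())  # persist before acknowledging the submitter

    def replay(self):
        if not os.path.exists(self.path):
            return []
        with open(self.path, encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]


def rebuild_run_queue(log):
    """After a restart, refill the in-memory queue from the durable layer."""
    return deque(log.replay())


path = os.path.join(tempfile.mkdtemp(), "tasks.log")
log = DurableLog(path)
log.append({"id": 1, "fn": "send_digest"})
log.append({"id": 2, "fn": "rebuild_index"})
run_queue = rebuild_run_queue(log)  # in-memory, fast to pop from
```

Losing the in-memory queue in a crash costs nothing but a replay, because the durable layer remains the source of truth.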

5. Warm workers reduce costs and improve predictability

Even partial warm starts improve throughput.

Conclusion

Meta’s XFaaS is one of the largest and most sophisticated serverless platforms on the planet. Its combination of durable queues, global balancing, worker consolidation, and intelligent scheduling demonstrates what’s possible when serverless architectures are built for hyperscale rather than generic cloud workloads.

While few companies will ever need XFaaS-level throughput, the architectural principles behind it offer powerful guidance for building resilient, cost-efficient, high-throughput async systems.

At JMS Technologies Inc., we design distributed platforms using these same principles for clients who require reliability, elasticity, and scale.

Need to design a large-scale serverless or async execution platform? Let’s talk.