How LLMs Like ChatGPT Work: A Deep Technical Breakdown for Modern Engineering Teams

Large Language Models (LLMs) like ChatGPT have quickly become some of the most influential technologies of the decade. From intelligent assistants to coding copilots, these models are transforming how we design software, build products, and operate technical organizations.

But what exactly happens inside these systems? How are they able to generate coherent responses, solve complex problems, and adapt to different contexts?

This article provides a detailed, practical explanation of how modern LLMs work—from core architecture to training pipelines and the mechanisms that enable their apparent reasoning. The goal is to help CTOs, founders, and engineering leaders understand not just the hype, but the actual mechanics behind models like ChatGPT and what they mean for production systems.

1. What Exactly Is an LLM and Why Is It So Powerful?

A Large Language Model (LLM) is a deep learning model trained to understand and generate natural language. On the surface, it seems like an advanced text generator. But its true reach is much broader: it can analyze code, write technical documentation, explain mathematical concepts, generate software architectures, and simulate step-by-step decision-making.

This is possible because LLMs learn patterns, semantic relationships, and logical structures from massive datasets. Instead of memorizing sentences, an LLM builds a statistical map of language and contextual knowledge.

At its core, an LLM is a next-token prediction machine—but one so large and sophisticated that advanced behaviors emerge that resemble reasoning.

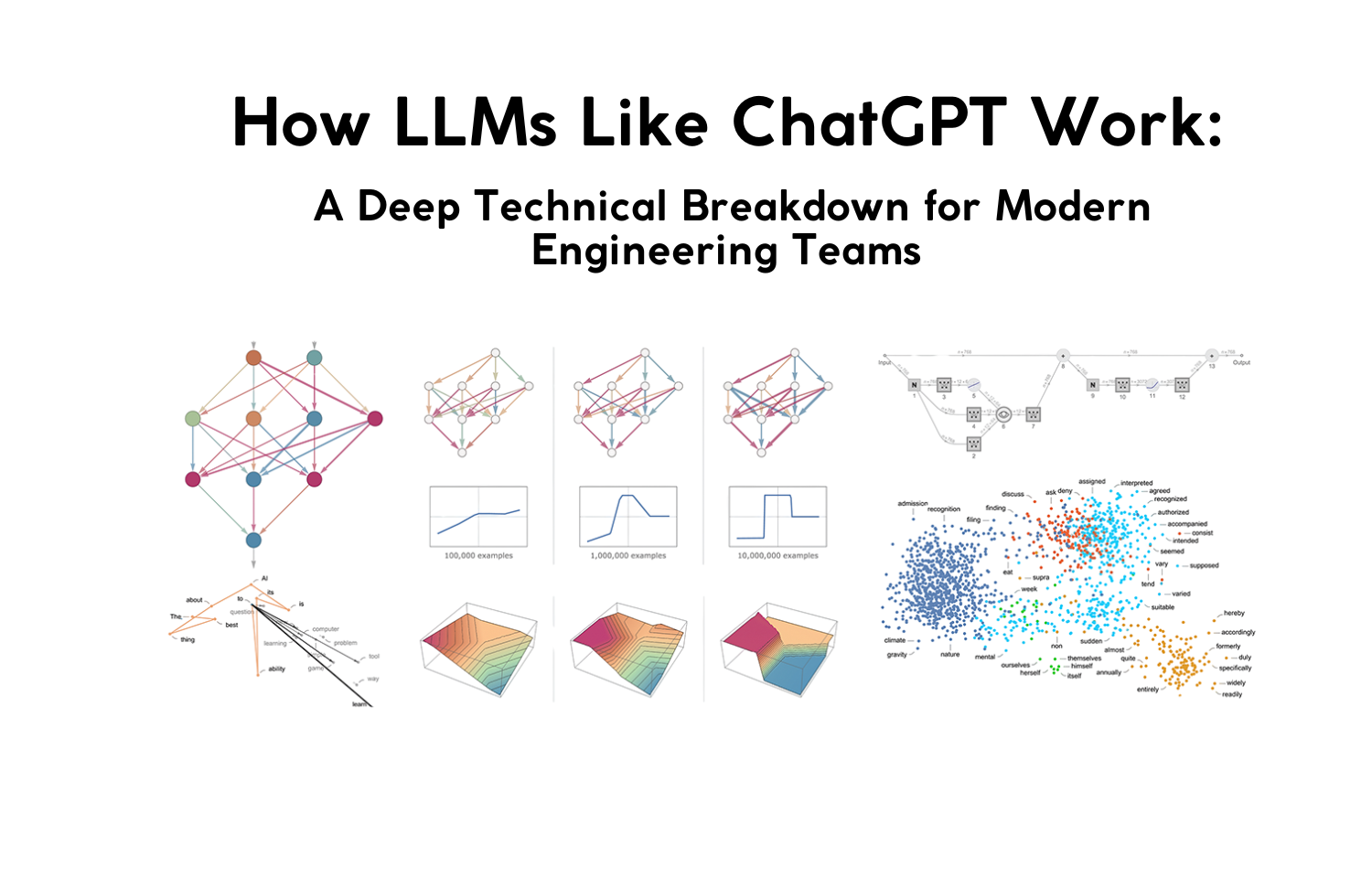

2. The Key Architecture: Transformers

The turning point for modern LLMs came with the Transformer architecture, introduced in 2017 in the paper “Attention Is All You Need.” Before Transformers, recurrent models (LSTM, GRU) processed text one token at a time, which made them hard to parallelize and weak at capturing long-range dependencies.

Transformers solved both problems through several key mechanisms:

2.1 The Engine: Self-Attention

Self-attention allows the model to determine which parts of a sentence are relevant when interpreting or generating the next token. It functions like an intelligent spotlight: the network learns to focus on the right words without human intervention.

Example: In the sentence: "The system crashed because it ran out of memory." Attention helps the model understand that "it" refers to "the system."
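To make the spotlight idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. The token list and the 2-dimensional query, key, and value vectors are hand-picked assumptions for illustration; in a real model they come from learned projections of the token embeddings.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical vectors for three tokens (assumed, not learned).
tokens = ["system", "crashed", "it"]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = keys
queries = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]  # the query for "it" points toward "system"

_, weights = self_attention(queries, keys, values)
for token, w in zip(tokens, weights[2]):
    print(f"'it' attends to {token!r} with weight {w:.2f}")
```

In this toy setup the largest weight for "it" lands on "system", which mirrors the pronoun example above.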

2.2 Feed-Forward Networks

After attention is calculated, the model runs the results through dense neural layers that transform and abstract the information.
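As a rough sketch of what such a dense block does, the snippet below expands a vector to a wider hidden layer, applies a non-linearity, and projects it back. The weights are made up for illustration, and ReLU is an assumption here; production models often use other activations.

```python
def relu(x):
    return max(0.0, x)

def linear(vector, weights, bias):
    """Multiply a vector by a weight matrix (list of rows) and add a bias."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weights, bias)]

def feed_forward(vector, w1, b1, w2, b2):
    """Position-wise feed-forward block: expand, apply ReLU, project back."""
    hidden = [relu(h) for h in linear(vector, w1, b1)]
    return linear(hidden, w2, b2)

# Hypothetical weights mapping 2 -> 4 -> 2 dimensions.
w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
b1 = [0.0, 0.0, 0.0, 0.0]
w2 = [[1.0, 0.0, 0.5, 0.0], [0.0, 1.0, 0.5, 0.0]]
b2 = [0.0, 0.0]

result = feed_forward([1.0, -2.0], w1, b1, w2, b2)
print(result)  # [1.0, 0.0]
```

The same function is applied independently to every token position, which is why this layer parallelizes so well.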

2.3 Embeddings and Semantic Representations

Words and tokens are converted into high-dimensional vectors. In this vector space, technical terms like “JavaScript” will sit closer to “TypeScript” than to “banana.” This semantic proximity is essential for understanding technical context.
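Semantic proximity is usually measured with cosine similarity. The sketch below uses three hand-written 3-dimensional vectors as stand-ins; real embeddings are learned and have hundreds or thousands of dimensions, so treat the numbers as assumptions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, chosen by hand for illustration only.
embeddings = {
    "JavaScript": [0.9, 0.8, 0.1],
    "TypeScript": [0.85, 0.9, 0.05],
    "banana": [0.1, 0.0, 0.95],
}

js_ts = cosine_similarity(embeddings["JavaScript"], embeddings["TypeScript"])
js_banana = cosine_similarity(embeddings["JavaScript"], embeddings["banana"])
print(f"JavaScript vs TypeScript: {js_ts:.2f}")
print(f"JavaScript vs banana:     {js_banana:.2f}")
```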

2.4 Positional Encoding

Because Transformers process all tokens in parallel rather than one at a time, they need positional signals to recover word order. Without them, "The dog chased the cat" and "The cat chased the dog" would present the model with the same set of token vectors.
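One way to supply that signal is the sinusoidal encoding from the original Transformer paper; many current models use learned or rotary variants instead. The sketch below assumes tiny one-hot style embeddings (hypothetical) and shows that the two sentences yield the same collection of token vectors until position information is added.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal encoding: sin on even dimensions, cos on odd dimensions."""
    encoding = []
    for i in range(0, d_model, 2):
        angle = position / (10000 ** (i / d_model))
        encoding.append(math.sin(angle))
        encoding.append(math.cos(angle))
    return encoding[:d_model]

# Hypothetical 4-d embeddings, one direction per word.
toy_embeddings = {
    "the": [1.0, 0.0, 0.0, 0.0],
    "dog": [0.0, 1.0, 0.0, 0.0],
    "chased": [0.0, 0.0, 1.0, 0.0],
    "cat": [0.0, 0.0, 0.0, 1.0],
}

def encode(sentence, use_positions):
    """Return the sentence's token vectors as an order-free (sorted) collection."""
    vectors = []
    for pos, word in enumerate(sentence.lower().split()):
        vec = list(toy_embeddings[word])
        if use_positions:
            pe = positional_encoding(pos, len(vec))
            vec = [v + p for v, p in zip(vec, pe)]
        vectors.append(vec)
    return sorted(vectors)

a = "The dog chased the cat"
b = "The cat chased the dog"
print(encode(a, use_positions=False) == encode(b, use_positions=False))  # True
print(encode(a, use_positions=True) == encode(b, use_positions=True))    # False
```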

3. How LLMs Are Trained

Training a production-grade LLM happens in three major phases.

3.1 Massive Pre-Training

Here, the model learns language itself. It is trained on trillions of tokens from books, documentation, technical blogs, academic papers, web pages, and code.

The objective is simple: Predict the next token.

But at a huge scale, this objective gives rise to:

- Grammatical understanding

- Programming structures

- Cause-and-effect relationships

- Reasoning patterns

- Scientific concepts

The result is broad, general knowledge embedded within the model’s neural weights.
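The pre-training objective can be written as a cross-entropy loss on the next token. The sketch below is a simplified illustration: the three-word vocabulary and the scores are invented, and a real model computes this over a vocabulary of tens of thousands of tokens at every position.

```python
import math

def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits, target_index):
    """Cross-entropy: the negative log of the probability given to the true next token."""
    probs = softmax(logits)
    return -math.log(probs[target_index])

# Hypothetical scores for the token following "ran out of".
vocab = ["cat", "dog", "memory"]
logits = [0.5, 0.2, 2.0]

loss_if_memory = next_token_loss(logits, vocab.index("memory"))
loss_if_cat = next_token_loss(logits, vocab.index("cat"))
print(f"loss when the true token is 'memory': {loss_if_memory:.3f}")
print(f"loss when the true token is 'cat':    {loss_if_cat:.3f}")
```

Training nudges the weights so that this loss shrinks across the whole corpus, which is where the behaviors listed above come from.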

3.2 Supervised Fine-Tuning (SFT)

In this phase, the model is trained with high-quality human examples:

- Clear explanations

- Step-by-step reasoning

- Clean code solutions

- Structured answers

This teaches the model “how it should respond” in real-world situations.

3.3 RLHF (Reinforcement Learning from Human Feedback)

This is where models like ChatGPT become conversational and helpful.

- The model generates several possible responses.

- Human experts rank them.

- A reward model learns the pattern of those preferences.

- The LLM is optimized to produce the best-rated responses.

Instead of optimizing only for next-token likelihood, the model is now also optimized for what human reviewers judge to be useful.
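The reward-model step is often trained with a pairwise preference loss. The article does not say which formulation any particular vendor uses, so the sketch below assumes the common Bradley-Terry style loss, with made-up reward scores.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model scores the human-preferred response higher.
    """
    margin = reward_chosen - reward_rejected
    return math.log(1.0 + math.exp(-margin))

# Hypothetical reward scores for two responses to the same prompt.
agrees_with_humans = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
disagrees_with_humans = preference_loss(reward_chosen=0.0, reward_rejected=2.0)
print(f"reward model agrees with ranking:    {agrees_with_humans:.3f}")
print(f"reward model disagrees with ranking: {disagrees_with_humans:.3f}")
```

Once the reward model is trained this way, its scores serve as the signal the LLM is optimized against.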

4. How LLMs Generate Responses

Once trained, response generation follows these steps:

- Tokenization: Convert user input into numerical tokens.

- Attention Processing: Determine which parts of the context matter.

- Prediction: Choose the most likely next token (or sample from a distribution).

- Iteration: Add the new token to the sequence and repeat.

Techniques like temperature, top-k sampling, and beam search are used to adjust creativity and determinism during this process.
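The loop above can be sketched in a few lines. Everything model-related here is a stand-in: `TOY_LOGITS` is a hypothetical lookup table keyed by the last token, and the "tokenizer" is a whitespace split. Only temperature and top-k are shown; beam search is left out.

```python
import math
import random

# Hypothetical stand-in for a trained model: next-token scores keyed by the last token.
TOY_LOGITS = {
    "the": {"system": 2.0, "model": 1.5, "banana": -1.0},
    "system": {"crashed": 2.5, "ran": 1.0, "the": -0.5},
    "model": {"ran": 1.5, "crashed": 1.0, "the": -0.5},
    "crashed": {"<end>": 3.0, "the": 0.0},
    "ran": {"<end>": 2.0, "the": 0.5},
    "banana": {"<end>": 1.0},
}

def sample_next(logits, temperature=1.0, top_k=None, rng=random):
    """Pick the next token using optional top-k filtering and temperature scaling."""
    candidates = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        candidates = candidates[:top_k]  # keep only the k highest-scoring tokens
    if temperature == 0:
        return candidates[0][0]  # greedy decoding: always the top token
    scaled = [score / temperature for _, score in candidates]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # unnormalized softmax
    names = [token for token, _ in candidates]
    return rng.choices(names, weights=weights, k=1)[0]

def generate(prompt, max_tokens=10, **sampling):
    tokens = prompt.lower().split()  # crude whitespace tokenizer (assumption)
    for _ in range(max_tokens):
        next_token = sample_next(TOY_LOGITS[tokens[-1]], **sampling)
        if next_token == "<end>":
            break
        tokens.append(next_token)  # append and repeat
    return " ".join(tokens)

print(generate("The", temperature=0))    # deterministic
print(generate("The", temperature=1.2))  # varies from run to run
```

Lower temperatures concentrate probability on the top candidates, while higher ones flatten the distribution and make unlikely tokens more probable.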

5. Where Does the “Knowledge” Come From?

It is crucial to understand that LLMs do not query a database.

- They generally do not store exact text; verbatim recall mostly happens when a passage appears many times in the training data (memorization).

- Their knowledge is encoded in distributed patterns across their weights: maps of language, syntax, semantics, and correlations.

Example: The model does not memorize: "React is a JavaScript library for building interfaces." Instead, it learns that "React" appears in high-probability contexts with "components," "UI," "state," and "JavaScript." These probabilistic relationships allow it to generate meaningful answers.
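As a loose analogy only, the snippet below counts which words appear alongside "react" in a four-sentence corpus invented for this example. An actual LLM holds no such count table; the relationships are spread across billions of weights. The point is simply that usable associations can come from statistics rather than stored sentences.

```python
from collections import Counter

# Tiny made-up corpus; real training data is trillions of tokens.
corpus = [
    "react components manage ui state",
    "react is a javascript library",
    "react components render the ui",
    "bananas are a yellow fruit",
]

def cooccurrence_probabilities(sentences, target):
    """Estimate how often each word shares a sentence with the target word."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        if target in words:
            counts.update(w for w in words if w != target)
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}

probs = cooccurrence_probabilities(corpus, "react")
for word in ("components", "ui", "javascript", "fruit"):
    print(f"{word}: {probs.get(word, 0.0):.2f}")
```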

6. Do LLMs Actually Reason?

LLMs do not reason like humans, nor do they run symbolic logic or internal algorithms.

Instead, they simulate reasoning using patterns learned from millions of examples where humans explain solutions, break down problems, and follow logical steps. The simulation is convincing enough to solve complex engineering tasks, but it is fundamentally probabilistic, not logical.

7. Limitations You Must Understand

Despite their capabilities, LLMs have real constraints that engineering teams must plan for:

- Hallucinations: They can confidently state incorrect facts.

- No Persistent Memory: Standard models do not "remember" past sessions without external context injection.

- Ambiguity Struggles: They require precise prompting.

- Data Cutoffs: They cannot verify live facts without tool access (like search).

- Infrastructure Cost: High inference costs for massive scale.

8. Infrastructure and Scalability

Running models like ChatGPT requires serious engineering.

8.1 Specialized Hardware

Clusters of high-performance GPUs (A100, H100, MI300X) or TPUs connected via high-bandwidth networking (InfiniBand) are required to handle the massive compute loads.
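A back-of-the-envelope calculation shows why a single accelerator is often not enough. The 70-billion-parameter model and the 80 GB of memory per device are assumptions chosen for illustration, and the estimate covers weights only, ignoring activations and the KV cache.

```python
import math

def weight_memory_gb(num_parameters, bits_per_parameter):
    """Memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return num_parameters * bits_per_parameter / 8 / 1e9

def min_devices(num_parameters, bits_per_parameter, device_memory_gb):
    """Lower bound on devices needed to fit the weights alone."""
    needed = weight_memory_gb(num_parameters, bits_per_parameter)
    return math.ceil(needed / device_memory_gb)

params = 70e9     # hypothetical model size
device_gb = 80    # assumed memory per accelerator

for bits in (16, 8, 4):
    gb = weight_memory_gb(params, bits)
    print(f"{bits}-bit weights: {gb:.0f} GB, at least {min_devices(params, bits, device_gb)} device(s)")
```

Real deployments need headroom beyond this lower bound, so actual device counts are usually higher.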

8.2 Parallelism Strategies

To split huge models across hardware, engineers use:

- Tensor parallelism

- Pipeline parallelism

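- Model parallelism (the umbrella term for the two techniques above), usually combined with data parallelism across replicas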
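Tensor parallelism from the list above can be illustrated without any GPU. In this simplified sketch a weight matrix is split by columns across two pretend devices, each computes its slice of the output, and the slices are concatenated. The matrix values are arbitrary, and real systems perform the final gather over the interconnect.

```python
def matmul(x, w):
    """Multiply a length-n vector by an n x m matrix given as a list of rows."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

def split_columns(w, parts):
    """Split a matrix into equal column blocks, one per device."""
    width = len(w[0]) // parts
    return [[row[p * width:(p + 1) * width] for row in w] for p in range(parts)]

x = [1.0, 2.0]
w = [[1.0, 2.0, 3.0, 4.0],
     [5.0, 6.0, 7.0, 8.0]]

full = matmul(x, w)                                 # single-device result
shards = split_columns(w, parts=2)                  # one shard per pretend device
partials = [matmul(x, shard) for shard in shards]   # computed independently
combined = [value for part in partials for value in part]

print(full)
print(combined)
```

Both lines print the same vector: each device only ever holds half of the weights, yet the combined result matches the single-device computation.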

8.3 Inference Optimizations

To reduce latency and cost in production:

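- Quantization: Reducing numerical precision (e.g., from 16-bit to 4-bit), often with only a small loss in accuracy.

- KV Caching: Storing the attention keys and values of tokens already processed so they are not recomputed for every new token.

- Speculative Decoding: Letting a small draft model propose several tokens ahead, which the large model then verifies in a single parallel pass, reducing latency.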
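To give a feel for the first item, here is a minimal sketch of symmetric round-to-nearest quantization to signed 4-bit integers. It is one simple scheme among many; production quantizers typically work per group or per channel and use more careful calibration. The weight values are invented.

```python
def quantize(weights, bits=4):
    """Map floats to signed integers in [-(2^(bits-1) - 1), 2^(bits-1) - 1]."""
    levels = 2 ** (bits - 1) - 1                            # 7 for 4-bit
    scale = (max(abs(w) for w in weights) / levels) or 1.0  # avoid dividing by zero
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

# Hypothetical slice of model weights.
weights = [0.12, -0.5, 0.33, 0.07]
q, scale = quantize(weights, bits=4)
restored = dequantize(q, scale)

print(q)
for original, approx in zip(weights, restored):
    print(f"{original:+.3f} -> {approx:+.3f}")
```

Each weight is now stored as a small integer plus one shared scale factor, and the rounding error per weight is at most half the scale.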

9. What’s Coming Next: The Future of LLMs (2025–2026)

The landscape is evolving rapidly. Key trends for the immediate future include:

- Advanced Multimodal Models: Native understanding of text, image, audio, and video simultaneously.

- Autonomous Agents: Models that can plan and execute multi-step tasks.

- RAG 2.0: Deep integration with vector databases for real-time accuracy.

- Structured Reasoning: Tree-of-thought prompting and hybrid neuro-symbolic systems.

- Small Language Models (SLMs): Efficient, domain-specific models that run on-device.

LLMs will not replace engineers, but they are becoming a foundational layer of the modern software stack.

Conclusion

Large Language Models operate through a combination of the Transformer architecture and its attention mechanism, large-scale pre-training, and fine-tuning guided by human feedback. They do not "think" like humans, but their statistical reasoning capabilities are powerful enough to solve practical engineering, design, and business problems.

For CTOs, founders, and technical teams, understanding how these models work is key to building reliable, scalable, and innovative AI-powered systems.

At JMS Technologies Inc., we specialize in helping companies navigate this new era of AI engineering. From integrating LLMs into existing products to building custom AI infrastructure, we ensure your technology stack is ready for the future.

Ready to integrate AI into your platform? Let's build the future together.